Multithreading: Part 1

It’s time for the fourth edition of Richard’s Arcade Tips! This time around, we’re going to be talking about multithreading! This post was inspired by @Galcake’s recent question on the subject. I wrote an answer in that thread, but wanted to give a more detailed discussion of threads in Arcade and how they work.

This is a pretty complex subject, so I’m going to split it into multiple posts:

- In Part 1, we’re going to be learning what exactly threads are and how they’re implemented in Arcade.
- In Part 2, we’ll learn more about what blocks in Arcade create new threads and how to use them properly in your games!

With that out of the way, let’s get started!

What are threads?

First off, let’s talk briefly about what threads really are. In the simplest terms, threads are what allow a program to do more than one thing at a time.

All programs running on a computer have state associated with them. This state includes memory (all the variables in the program) and a pointer to whatever instruction is currently executing in that program. As the program runs, this pointer moves from one instruction to the next, running each instruction in sequence and updating the program’s memory. The instruction pointer in this case is a single “thread” of execution. In a multi-threaded program, each thread has its own instruction pointer that can run independently of the others while still sharing the same memory.

To make this a little easier to understand, let’s use a metaphor!

Imagine you and some friends are walking around a grocery store with a shopping cart and a list of things to buy. To complete your errand, you need to find every item on that list and add it to your cart. One way to do this task would be for you and all of your friends to walk around as one unit and go down the list item by item, one at a time. This would be the single-threaded way of doing things. There is one instruction pointer, which is the current item that you and your friends are all looking for together.

Let’s rewrite this scenario to use multiple threads. First off, we can divide our shopping list into multiple sublists (dairy, fish, fruit, etc). Then, each friend can take one of these sublists and go looking for the items to bring back to the shopping cart. Now we have multiple threads going! Each friend has a pointer to the item they are currently looking for, but we still have one shared memory pool that everyone is using (the shopping cart).
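If it helps to see the metaphor as code, here is a minimal sketch in plain TypeScript (not Arcade blocks). Everything in it is made up for illustration: the `Shopper` type, the `step` function, and the items on each sublist. Each shopper keeps their own `pointer` (the instruction pointer), while everyone writes into the same `cart` (the shared memory). The shoppers take turns here instead of truly running at the same time, which is enough to show the idea.

```typescript
// Each "thread" is a shopper with their own pointer into their own sublist.
interface Shopper {
    label: string;
    sublist: string[];
    pointer: number; // index of the item this shopper is currently looking for
}

// The cart is the shared memory that every shopper writes to.
const cart: string[] = [];

// Run one "instruction": fetch the current item and advance the pointer.
// Returns false once the shopper has finished their sublist.
function step(shopper: Shopper): boolean {
    if (shopper.pointer >= shopper.sublist.length) return false;
    cart.push(shopper.sublist[shopper.pointer]);
    shopper.pointer += 1;
    return true;
}

const shoppers: Shopper[] = [
    { label: "dairy", sublist: ["milk", "cheese"], pointer: 0 },
    { label: "fish", sublist: ["salmon"], pointer: 0 },
    { label: "fruit", sublist: ["apples", "pears", "plums"], pointer: 0 },
];

// Interleave the shoppers one step at a time until everyone is done.
let anyoneWorking = true;
while (anyoneWorking) {
    anyoneWorking = false;
    for (const shopper of shoppers) {
        if (step(shopper)) anyoneWorking = true;
    }
}

console.log(cart.join(", "));
// prints "milk, salmon, apples, cheese, pears, plums"
```

Notice that the items from different sublists end up mixed together in the cart. Each pointer moves independently, but they all share one destination.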

Scheduling

CPUs typically have a set number of threads that the hardware can run simultaneously. For example, the CPU of the laptop I’m currently typing this post on supports 8 simultaneous threads. But what happens when the number of threads is greater than the number a CPU can support? Well, that’s when a special program called a scheduler steps in to manage things.

The scheduler is in charge of telling threads when to run and when to stop so another thread can use the CPU. When it’s time to switch threads, the scheduler will pause the execution of the currently running thread and start running another on the CPU. Then, eventually, that thread will get paused so another can run and so on and so forth. There are two strategies for choosing when to switch threads. The first is to wait for a thread to volunteer to stop running so that another can use the CPU. This is called non-preemptive scheduling (“preempt” in this context means “to interrupt”). The other way is to interrupt threads without asking permission, which is called preemptive scheduling.

Going back to our earlier metaphor, each friend represents a CPU hardware thread and each sublist of items is a thread in our program. If we had more sublists than we have friends, one person could act as a scheduler and tell each friend which sublist they should be working on.

If we were to use non-preemptive scheduling, our scheduler would wait to switch threads until a friend declares they are bored of dairy and would really appreciate going to a different department, maybe veggies if that would be okay with everyone. Then the scheduler would choose a new list for that person/CPU to work on.

If we were to use preemptive scheduling, our scheduler would instead use the PA system of the grocery store to bark orders at each person/CPU telling them to switch whenever the scheduler feels like it and really making you question whether they’re your friend at all.
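To make the non-preemptive version a bit more concrete, here is a rough sketch of a scheduler with a single friend (one CPU) and two sublists. It is plain TypeScript, and `SublistTask`, `makeTask`, and `runAll` are hypothetical names invented for this example, not anything from a real scheduler. The important part is that `runAll` never interrupts a task: it only picks the next one after the current task returns on its own.

```typescript
type TurnResult = "yielded" | "done";

interface SublistTask {
    label: string;
    // Runs until the task volunteers to stop, then reports why it stopped.
    run: () => TurnResult;
}

const log: string[] = [];

// Hypothetical helper: builds a task that finds up to `itemsPerTurn` items
// from its sublist before volunteering to give up the CPU.
function makeTask(label: string, items: string[], itemsPerTurn: number): SublistTask {
    let pointer = 0;
    return {
        label: label,
        run: () => {
            for (let i = 0; i < itemsPerTurn && pointer < items.length; i++) {
                log.push(label + ":" + items[pointer]);
                pointer++;
            }
            return pointer < items.length ? "yielded" : "done";
        },
    };
}

// Non-preemptive round robin on one CPU: a task keeps the CPU until it
// returns, and unfinished tasks go to the back of the queue.
function runAll(tasks: SublistTask[]): void {
    const queue = tasks.slice();
    while (queue.length > 0) {
        const task = queue.shift() as SublistTask;
        if (task.run() === "yielded") queue.push(task);
    }
}

runAll([
    makeTask("dairy", ["milk", "cheese", "butter"], 1),
    makeTask("fruit", ["apples", "pears"], 2),
]);

console.log(log.join(" "));
// prints "dairy:milk fruit:apples fruit:pears dairy:cheese dairy:butter"
```

The dairy task gives up the CPU after every item, while the fruit task holds on for two items in a row. A task that never returned from `run` would keep everyone else waiting forever, which is the main risk of non-preemptive scheduling.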

Threading in MakeCode

Now that we understand threads, what does multithreading look like in MakeCode Arcade?

Arcade has a single virtual CPU that is capable of running one thread at a time. Because we can have more than one thread in our program, that means that Arcade also has a scheduler to choose which thread is currently running on our virtual CPU. Arcade’s scheduler uses non-preemptive scheduling to choose a thread to run, meaning it waits for the current thread to give up control before starting another.

So why should I care about threads?

In MakeCode Arcade’s game engine, there is one main thread that executes most of the game logic. This thread needs to do a lot of things for each frame of your game, including physics for all of the sprites, tilemap collisions, rendering the screen, and so on. Arcade games typically run at 30 FPS, which means that the game engine needs to do all this work in about 0.033 seconds (1/30 of a second) or less. That’s pretty fast!

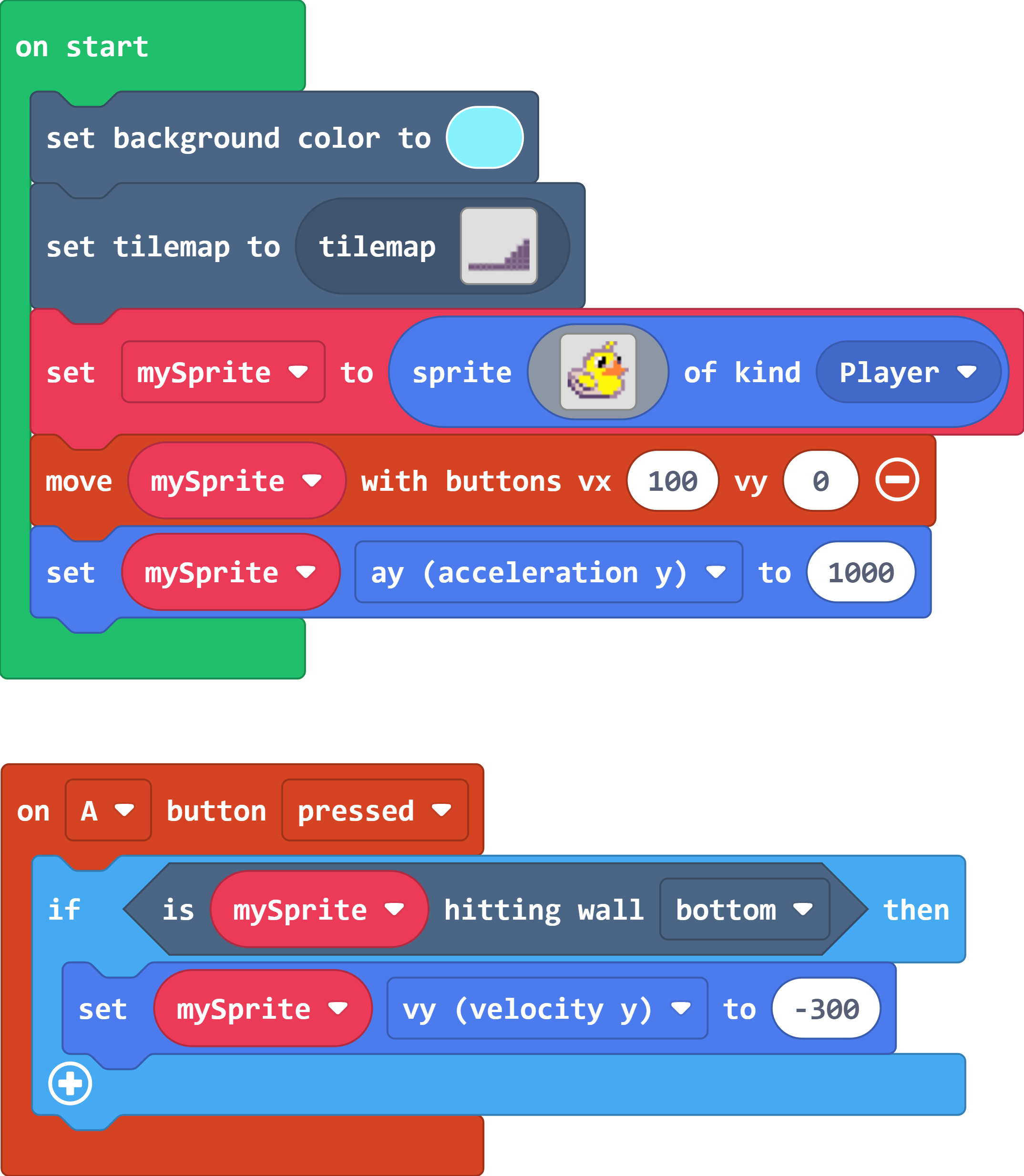

Most of the code that you write in MakeCode Arcade gets executed on this main game engine thread. If any part of the code running on that thread pauses, that means the entire rest of the frame will be delayed until that code stops pausing. To understand this better, let’s take a look at an example. Here’s the code for a simple platformer:

Here is a link to that project. Try playing it to get a feel for how it works.

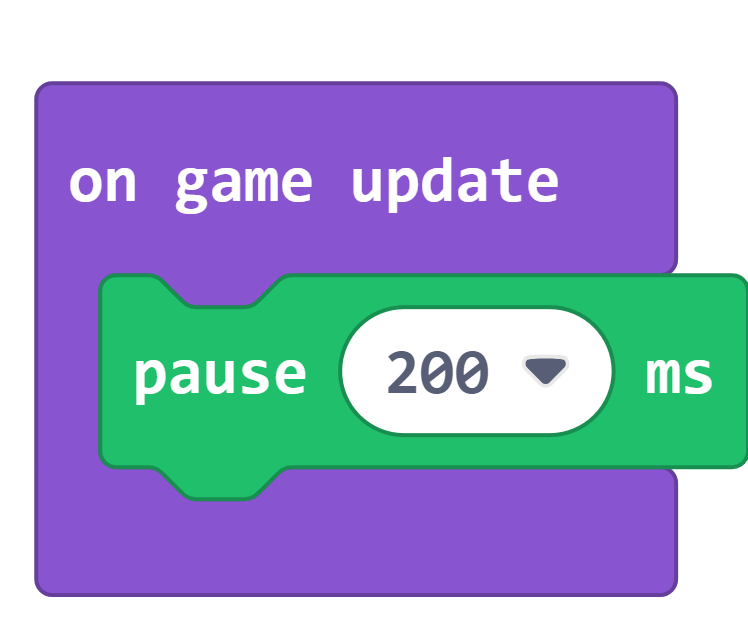

What happens if we put a pause in the game loop of this project? Let’s test it by adding this code to our game:

Try playing the game again and you’ll notice we are now running at approximately one FPS. The game engine can’t redraw the screen until all code on the game loop thread finishes running, so we have effectively forced our game to run slower by pausing.
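Here is some back-of-the-envelope arithmetic for why the frame rate drops so far, written as plain TypeScript. This is a simplified model and not the actual engine code: `effectiveFps` is a made-up function, the 10 ms of per-frame work is a guess, and the 1000 ms pause is an assumed duration chosen to match the roughly one FPS described above.

```typescript
const targetFps = 30;
const frameBudgetMs = 1000 / targetFps; // about 33.3 ms per frame

// Hypothetical model: a frame never finishes faster than its budget, and it
// cannot finish until all work and pauses on the main thread are done.
function effectiveFps(workMs: number, pauseMs: number): number {
    const frameTimeMs = Math.max(frameBudgetMs, workMs + pauseMs);
    return 1000 / frameTimeMs;
}

console.log(effectiveFps(10, 0).toFixed(1));    // no pause: prints "30.0"
console.log(effectiveFps(10, 1000).toFixed(1)); // assumed 1000 ms pause: prints "1.0"
```

In this model, any pause longer than the frame budget comes straight out of the frame rate, because nothing else in the frame can happen until the pause is over.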

This example might seem a little contrived (why would you put a pause in an on game update?), so let’s try something a little more realistic. Say I wanted to play a quick melody when the player overlaps with a tile. That code might look something like this:

Note how we have the melody set to play “until done”; this means that the block will pause until all the notes have finished playing. This should be fine, right? The last code was running inside an “on game update”, which sounds like it’s part of the game loop, but this is an event! It probably runs inside its own thread!

Well, try out the new version of the game here.

When the player overlaps the flag, the game freezes until the melody stops playing. That’s because tile overlap events run on the main game thread. In fact, most events in Arcade run on the main game thread. If we want to have code that pauses, we need to find a way to create our own thread that we can pause without interrupting the main thread.
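The freeze can be sketched with a toy model of a game loop that calls event handlers directly on the main thread. This is plain TypeScript with a fake clock, not Arcade’s real engine: `runFrame` and `playMelodyUntilDone` are hypothetical stand-ins, and the 2000 ms melody length is an assumption.

```typescript
// Toy model: event handlers are called directly from the main loop,
// so a handler that blocks also blocks every frame after it.
const frameMs = 1000 / 30;
let clockMs = 0;
const frameTimes: number[] = []; // simulated time at which each frame finished

// Stand-in for a "play melody until done" block: it does not return until
// the melody is over, which we model by advancing the fake clock.
function playMelodyUntilDone(durationMs: number): void {
    clockMs += durationMs;
}

function runFrame(playerOnFlag: boolean): void {
    if (playerOnFlag) {
        playMelodyUntilDone(2000); // assumed melody length
    }
    clockMs += frameMs; // physics, collisions, rendering
    frameTimes.push(Math.round(clockMs));
}

runFrame(false);
runFrame(false);
runFrame(true); // the overlap event fires during this frame
runFrame(false);

console.log(frameTimes.join(", "));
// prints "33, 67, 2100, 2133"
```

The first two frames finish about 33 ms apart, but the third does not finish until just over two seconds later. No frames are drawn during that gap, and that gap is the freeze you see in the game.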

End: Part 1

Thanks for reading!

Tune in for Part 2, where I will share more example code for using threads in your Arcade projects! Will there be a Part 3? Who knows! Depends on how long Part 2 is!